Executive Summary: OpenAI Company Knowledge connects ChatGPT to a business’s approved work apps and surfaces answers with citations while honoring existing permissions. Used well, OpenAI Company Knowledge cuts the time from “where is that doc?” to “here’s the decision,” which improves proposal speed, reporting accuracy, and cross-team alignment. The result is faster decisions, fewer rework loops, and measurable return on investment.

What Is OpenAI Company Knowledge (and Why It Drives ROI)

OpenAI Company Knowledge is an AI-powered knowledge layer that works across the drives, documents, email, CRM notes, tickets, task boards, and chat channels the team already uses. Instead of making people switch tabs, the assistant searches authorized sources, synthesizes the answer, and cites where each fact came from. Access follows current permissions, so people only see content they’re entitled to view.

For revenue and marketing teams, the value is direct. There are fewer context gaps, faster creative briefs, cleaner post-mortems, and better executive updates. Ultimately, those gains shorten decision cycles and reduce rework, two reliable drivers of marketing ROI.

Action to take today: list three high-friction questions that repeatedly stall progress (for example, “What changed in the Q4 promo plan since last Friday?”). Pilot those first and require cited answers by default.

The 7 Highest-ROI Plays to Try This Week

Below are seven practical workflows that turn this platform into business impact. Each outlines goals, inputs, a simple workflow, and a measurement plan so teams can prove value quickly.

1) Pitch-Ready Client Briefs in Minutes

Goal: transform scattered inputs into a one-page brief that includes objectives, constraints, open questions, and citations.

Inputs: client Slack channel messages, the latest strategy doc, key client emails, and CRM activity.

Workflow:

- Ask: “Create a client brief for tomorrow’s call using the last 14 days of #client-acme, ‘Q4 Brief.docx,’ and the most recent email thread with subject ‘Promo cadence.’”

- Require sections for Objectives, Constraints, Open Questions, Risks, and Next Steps, each with source links.

- Save the output to the shared client folder and tag the account owner for review.

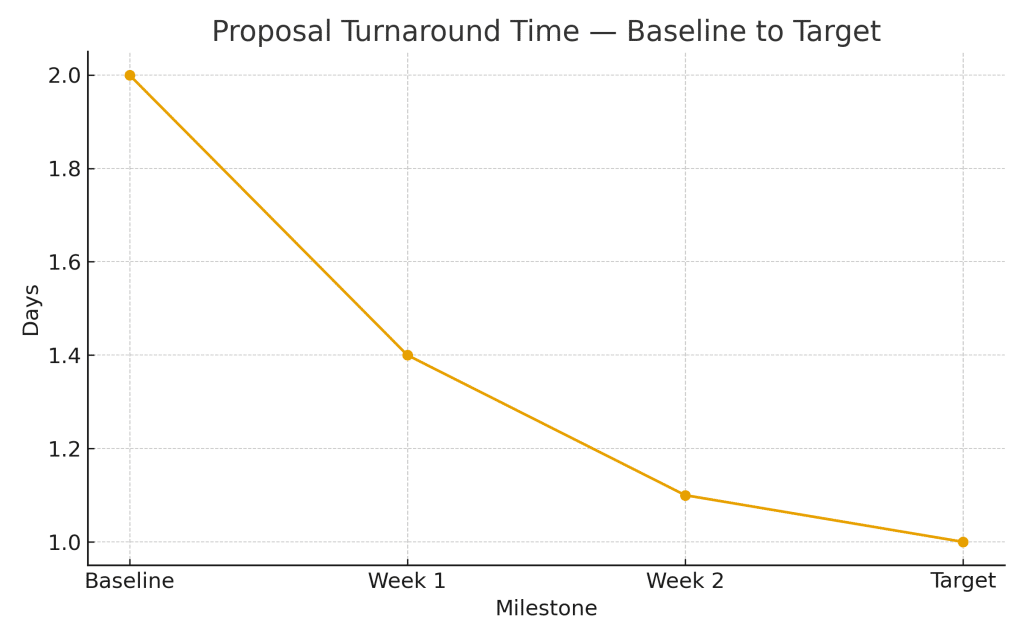

Measurement: target a 40–60% reduction in proposal turnaround time without losing accuracy. Track revision counts and time to client approval.

Real-world scenario: an agency team collapsed six tabs and three threads into a single cited brief. What used to take two hours took forty minutes, and the client approved scope the same day.

2) Campaign Post-Mortems That Don’t Miss Context

Goal: extract wins, losses, root causes, and a next-test matrix backed by evidence. Post-mortems often miss details because key facts sit in different tools. The assistant closes that gap.

Inputs: ad account notes, Slack debrief threads, ticket escalations, and CRM opportunity updates.

Workflow:

- Ask: “Summarize top five learnings from the Back-to-School campaign. Include root causes and link to evidence.”

- Require a “What we’ll test next” matrix with hypothesis, owner, and due date.

- Publish to the campaign folder and share in #marketing-reviews.

Measurement: reduce time-to-insight and increase test velocity. Track the number of experiments launched within seven days of the retro.

Example outcome: the assistant cited a support thread explaining a surge in refund tickets from a specific landing-page variant. That single citation redirected the next sprint and saved a week that would otherwise have been wasted.

3) Weekly Executive Rollups with Real Recency

Goal: produce concise weekly updates that surface deltas versus targets, owners, risks, and confidence levels, with citations under each metric.

Inputs: performance dashboards, working docs, Slack launch chatter, and support tickets.

Workflow:

- Ask: “Create a weekly rollup since last Friday on the Q4 launch. Highlight metric changes, owner, and confidence level. Cite the source for each metric.”

- Include a “Risks & Blockers” section with evidence and proposed mitigations.

- Send the rollup to leadership and store it in /Exec/Weeklies with consistent naming.

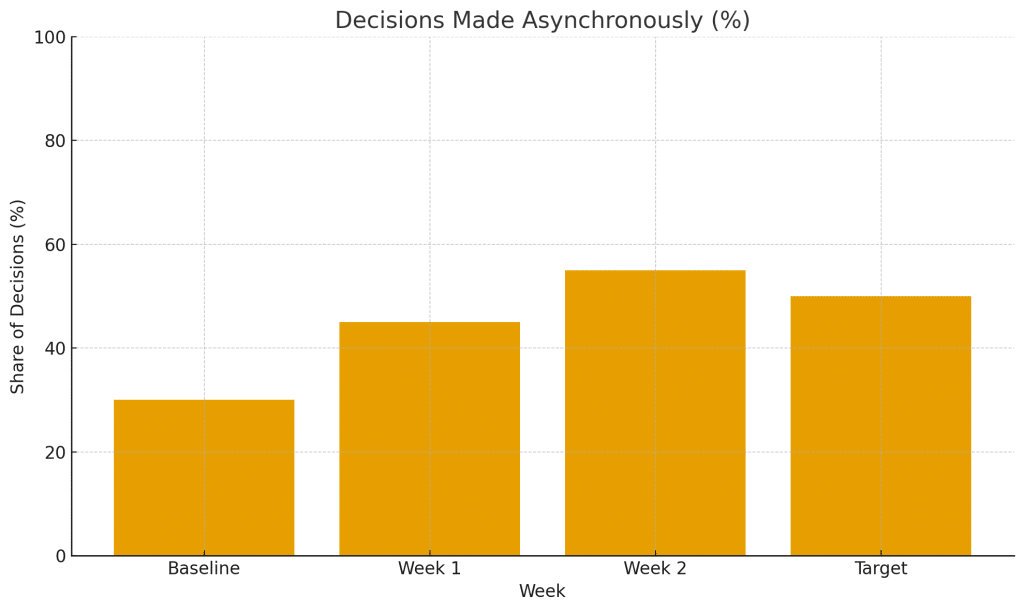

Measurement: shorten review time and catch blockers earlier. Track how many decisions happen asynchronously versus needing a meeting.

Tip: require a one-line confidence note under each metric so leaders know which numbers are rock-solid versus directional.

4) Voice-of-Customer (VoC) Summaries That Drive Roadmaps

Goal: convert scattered customer feedback into themes, representative quotes, and product or offer experiments.

Inputs: support tickets, NPS verbatims, app-store reviews, and the #feedback channel.

Workflow:

- Ask: “Cluster VoC themes seen in the last 30 days. Only include themes with at least three corroborating citations. Provide representative quotes and links.”

- Map top themes to suggested experiments or content updates and assign an owner.

- Review monthly with product and lifecycle marketing.
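The "at least three corroborating citations" rule can also be checked after the fact. The following is a minimal sketch, assuming the assistant's themes have been copied into a simple list; the `Theme` shape, the `corroborated` helper, and the sample data are hypothetical and not part of any OpenAI API.

```python
from __future__ import annotations

from dataclasses import dataclass, field

MIN_CITATIONS = 3  # threshold taken from the prompt above


@dataclass
class Theme:
    """Hypothetical shape for one exported VoC theme."""
    name: str
    citations: list[str] = field(default_factory=list)  # links or IDs of sources


def corroborated(themes: list[Theme], minimum: int = MIN_CITATIONS) -> list[Theme]:
    """Keep themes backed by at least `minimum` distinct citations, most-cited first."""
    kept = [t for t in themes if len(set(t.citations)) >= minimum]
    return sorted(kept, key=lambda t: len(set(t.citations)), reverse=True)


# Illustrative data only; duplicate citations count once.
themes = [
    Theme("shipping cost confusion", ["ticket-101", "ticket-117", "review-9", "ticket-101"]),
    Theme("slow onboarding email", ["ticket-140", "ticket-140"]),
    Theme("coupon field hidden", ["ticket-88", "review-4", "feedback-msg-12", "review-7"]),
]

for theme in corroborated(themes):
    print(theme.name, len(set(theme.citations)))
```

Counting distinct citations rather than raw mentions keeps one noisy ticket from promoting a theme on its own.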

Measurement: increase the percentage of roadmap items tied to validated insights. Track uplift in conversion or retention for items sourced from VoC.

Example: a spike in “shipping cost confusion” across tickets and reviews triggered a checkout copy test. The fix cut cart abandonment by 8% week over week.

5) Creative QA Checklists Built from Real Misses

Goal: reduce rework by turning historical QA misses into preventive checklists embedded in specs.

Inputs: past QA bugs, #design-qa channel messages, client notes about brand issues, and platform policy rejections.

Workflow:

- Ask: “From the last 90 days of design QA and client feedback, list common failure modes. Create a pre-flight checklist for paid social assets.”

- Attach the checklist to every new creative spec and require a check-off on submission.

- Update monthly as new misses appear.

Measurement: track revisions per asset and on-time delivery rate. Aim to reduce preventable revisions by 25% or more within one quarter.

Tip: include platform-specific checks (aspect ratio, safe areas, alt text) and brand-specific items (fonts, logo clear space).

6) Sales Enablement Sheets for New Offers

Goal: arm sales with a one-pager talk track, objection handling, and competitor counters, each backed by sources the assistant cites.

Inputs: feature PRD, GitHub or Linear issues, pricing docs, support macros, and competitive notes.

Workflow:

- Ask: “Create a sales one-pager for the new Starter Plus plan. Include key benefits, three common objections with responses, and three competitor counters with links to evidence.”

- Export to PDF for easy sharing and keep an editable version in the sales playbooks folder.

- Review with sales once per sprint and archive versions with changelogs.

Measurement: monitor win rate for the new SKU and rep ramp time. Add a CRM field to tag usage of the one-pager in closed-won notes.

Tip: require source citations for each claim to prevent anecdotal comparisons.

7) Release Planning that Aligns Product and Marketing

Goal: unify engineering milestones with marketing timelines, risks, and dependencies in one cited plan.

Inputs: GitHub or Linear boards, engineering Slack channels, marketing timeline docs, and content calendars.

Workflow:

- Ask: “Create a cross-team release plan for v2.4 using the engineering board and the marketing calendar. List dependencies, owners, and risk owners. Include ‘untracked work to log.’”

- Publish a single, cited plan with checklists for PR, ads, lifecycle, and support readiness.

- Hold a 15-minute async review; meet only on red items.

Measurement: reduce slipped dates and increase lead time on dependencies. Track how often “untracked work” is caught before launch day.

Quick-Start: OpenAI Company Knowledge Setup in 20 Minutes

Start small yet end-to-end. The goal is one working workflow that proves value within days. This simple setup makes the knowledge layer productive without heavy change management.

- Connect the right sources: shared drives (Google Drive or SharePoint), Gmail or Outlook, Slack or Teams, CRM (HubSpot or Salesforce), ticketing (Zendesk, Intercom, Jira), and task boards. Use SSO and SCIM where possible.

- Pick a “pilot pod”: one marketer, one product manager, one customer success lead, and one analyst. Keep scope tight to speed iteration.

- Create prompt templates: Client Brief, Weekly Rollup, VoC Summary, and Post-Mortem. Save them in a shared folder for reuse and versioning.

- Enable citations: require the assistant to include links for every metric and claim. Store outputs in a shared location so anyone can audit the trail.

- Guardrails: adopt least-privilege access, turn on IP allowlisting if supported, avoid copying raw PII into prompts, and respect per-client spaces.

Measurement: Prove ROI in the First 14 Days

Measure time saved and decision quality. Start with a clear baseline, then compare weekly. Multiply hours saved by a loaded hourly rate, and note any impact on CAC and LTV when a signal exists. The pilot should show improving operational and quality metrics within two weeks.

Baseline and Weekly Tracking

- Operational: time to brief, time to report, revision count, number of status meetings, decision latency.

- Quality: percentage of responses with citations, number of errors caught pre-launch, blockers escalated earlier.

- Financial: hours saved × loaded hourly rate; secondary lens on CAC/LTV where attributable.
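The financial line above is simple arithmetic, sketched here under stated assumptions. The two-hour and forty-minute figures come from the client-brief scenario earlier in this article; the five briefs per week and the $85 loaded hourly rate are hypothetical placeholders to replace with real numbers.

```python
def hours_saved_per_week(baseline_minutes: float, actual_minutes: float,
                         tasks_per_week: int) -> float:
    """Minutes saved per task, scaled to a week and converted to hours."""
    return (baseline_minutes - actual_minutes) * tasks_per_week / 60


def weekly_value(hours_saved: float, loaded_hourly_rate: float) -> float:
    """Hours saved x loaded hourly rate."""
    return hours_saved * loaded_hourly_rate


# 120 -> 40 minutes per brief (from the scenario above);
# 5 briefs per week and an $85 loaded rate are assumptions for illustration.
hours = hours_saved_per_week(baseline_minutes=120, actual_minutes=40, tasks_per_week=5)
value = weekly_value(hours, loaded_hourly_rate=85.0)
print(f"{hours:.2f} hours saved, worth ${value:.2f} per week")
```

Keeping the baseline and actual minutes as separate inputs makes it easy to rerun the same calculation each week as the scorecard fills in.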

Pilot KPI Scorecard (Template)

Copy this table into the workspace and update it weekly.

| KPI | Owner | Baseline | Target (14 Days) | Week 1 Actual | Week 2 Actual | Notes / Citations Required? |
|---|---|---|---|---|---|---|
| Proposal Turnaround Time | Account Lead | 2 days | ≤ 1 day | | | Yes: link to brief and inputs |
| Post-Mortem Cycle Time | Growth PM | 5 days | ≤ 3 days | | | Yes: link to evidence per learning |
| Executive Review Time | Ops | 45 min | ≤ 20 min | | | Yes: rollup stored in shared folder |
| Preventable Revisions per Asset | Creative | 1.8 | ≤ 1.2 | | | Checklist attached to spec |
| Decisions Made Async | Leads | 30% | ≥ 50% | | | Require confidence note per metric |
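If the scorecard is tracked outside a spreadsheet, the target check can be sketched as follows. This is a minimal illustration, not a prescribed tool: the `Kpi` class is hypothetical, the baselines and targets are taken from the table above, and the Week 2 actuals are invented purely to show the output.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Kpi:
    name: str
    baseline: float
    target: float
    lower_is_better: bool = True  # False for "Decisions Made Async"

    def met(self, actual: float) -> bool:
        """True when the actual value reaches the target in the right direction."""
        if self.lower_is_better:
            return actual <= self.target
        return actual >= self.target

    def change_pct(self, actual: float) -> float:
        """Percentage change from baseline (negative means the number went down)."""
        return (actual - self.baseline) / self.baseline * 100


scorecard = [
    Kpi("Proposal Turnaround Time (days)", 2.0, 1.0),
    Kpi("Executive Review Time (min)", 45.0, 20.0),
    Kpi("Preventable Revisions per Asset", 1.8, 1.2),
    Kpi("Decisions Made Async (%)", 30.0, 50.0, lower_is_better=False),
]

# Hypothetical Week 2 actuals, for illustration only.
week2_actuals = [1.0, 25.0, 1.1, 55.0]

for kpi, actual in zip(scorecard, week2_actuals):
    status = "met" if kpi.met(actual) else "not met"
    print(f"{kpi.name}: {actual} ({kpi.change_pct(actual):+.0f}% vs baseline) -> {status}")
```

The `lower_is_better` flag matters because most rows use a "≤" target while the async-decisions row uses "≥".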

Governance, Privacy, and Client Trust

Trust accelerates adoption. Make controls visible and consistent so teams and clients can audit how the assistant is used across the organization.

- Permissions model: the assistant surfaces only what a user can already access. Do not expand access for convenience; keep least privilege as the default.

- Admin controls: manage which apps are connected, define group-level access, and verify that compliance logs are available for audits.

- Client spaces: maintain per-client folders and channels using consistent naming, retention rules, and tagging for sensitive assets.

- Prompt hygiene: avoid pasting raw PII. Reference records by ID or link rather than copying data inline.

- Contract language: add a short clause to the MSA/SOW clarifying that an AI assistant synthesizes information with citations and that data access follows existing permissions.

Limitations Today: Simple Workarounds

- Mode switching: when Company Knowledge is enabled in a conversation, web search or media generation may be limited. If external lookups or charts are needed, turn it off, complete the task, then turn it back on and resume the thread.

- Operator habit: make it standard to enable the feature at the start of relevant conversations. A pinned checklist helps.

- Content hygiene: poor source organization creates poor answers. Nominate an owner to maintain a “Source of Truth” index with canonical docs for pricing, calendars, and style guides.

- Ambiguous prompts: vague asks yield generic outputs. Use tight prompts with required sections and time windows (for example, “since last Friday”).

Getting Started: A One-Week Pilot Plan

The objective is a small, auditable win in seven days. Keep scope lean and document everything so the next team can repeat the process.

- Days 1–2: connect apps, verify permissions, and select three high-friction questions (brief, weekly rollup, VoC). Capture baseline metrics.

- Days 3–4: run the three workflows. Require citations for every metric and claim. Store outputs in shared folders with versioned filenames.

- Day 5: compare against baseline. Did turnaround time, revision count, or blocker escalations improve? Decide whether to expand, iterate, or stop.

- Packaging: publish the prompt templates, KPI scorecard, governance checklist, and a “What we learned” note in a single folder for future pods.
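For the versioned filenames mentioned in the plan, one possible convention is sketched below. The date-slug-version pattern, the `.md` extension, and the `versioned_name` helper are assumptions for illustration; any scheme works as long as the whole pod applies it consistently.

```python
import re
from datetime import date


def versioned_name(title: str, day: date, version: int, ext: str = "md") -> str:
    """Build a sortable filename: ISO date, lowercase slug, zero-padded version."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    return f"{day.isoformat()}_{slug}_v{version:02d}.{ext}"


# Hypothetical title and date, for illustration only.
print(versioned_name("Weekly Rollup: Q4 Launch", date(2025, 10, 17), 2))
```

Leading with the ISO date keeps files in chronological order in any folder view, and the zero-padded version keeps v02 ahead of v10.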

Practical Prompts You Can Copy

Tailor these to your folder names and channels. Always require citations and a date window so answers stay fresh and auditable.

- Client Brief: “Create a one-page brief for the Acme Q4 Promo using the last 14 days of #client-acme, the ‘Q4 Brief.docx,’ and recent CRM notes. Include Objectives, Constraints, Open Questions, Risks, and Next Steps. Cite sources inline.”

- Post-Mortem: “Summarize the top five learnings from the Back-to-School campaign. Provide root causes and a test matrix (hypothesis, owner, due date). Link to evidence in each bullet.”

- Weekly Rollup: “Since last Friday, list metric deltas, owners, confidence levels, and blockers for the Holiday Launch. Add a Risks & Mitigations section. Include links to spreadsheets or dashboards.”

- VoC Summary: “From support tickets, NPS comments, and the #feedback channel in the last 30 days, cluster themes with at least three corroborating citations each. Provide sample quotes and recommended experiments.”
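Teams that want every prompt to carry the same required parts (sources, a date window, named sections, and a citation rule) could generate them from one template. The sketch below is only one way to do that: `build_prompt` and its parameters are hypothetical, and the output is plain text to paste into ChatGPT rather than a call to any API.

```python
from __future__ import annotations

from datetime import date, timedelta


def build_prompt(task: str, sources: list[str], sections: list[str],
                 window_days: int, today: date | None = None) -> str:
    """Assemble a prompt with an explicit date window and a citation requirement."""
    today = today or date.today()
    start = today - timedelta(days=window_days)
    parts = [
        f"{task} using {', '.join(sources)}.",
        f"Only use material dated from {start.isoformat()} to {today.isoformat()}.",
        f"Include these sections: {', '.join(sections)}.",
        "Cite a source link for every metric and claim.",
    ]
    return " ".join(parts)


# Example values mirror the Client Brief prompt above; the date is hypothetical.
prompt = build_prompt(
    task="Create a one-page brief for the Acme Q4 Promo",
    sources=["#client-acme", "'Q4 Brief.docx'", "recent CRM notes"],
    sections=["Objectives", "Constraints", "Open Questions", "Risks", "Next Steps"],
    window_days=14,
    today=date(2025, 10, 15),
)
print(prompt)
```

Spelling out absolute dates instead of "the last 14 days" makes a saved prompt auditable later, since the window no longer depends on when it was run.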

Case Study: From Scatter to Decision in Under an Hour

A retail brand needed a rapid creative refresh after performance softened. The team used the assistant to assemble a cited brief from Slack campaign chatter, a pricing FAQ doc, and recent A/B test notes. The assistant highlighted two issues: ad copy mismatched the latest promo terms, and a commonly used image failed safe-area guidelines on a key placement.

Within the same morning, the creative lead pulled the QA checklist derived from historical misses, fixed the safe-area violation, and shipped a new ad set aligned to current offers. The weekly rollup documented the change, linked every source, and recorded an 11% improvement in CTR with a modest CPA drop over the next three days. Everything remained auditable through consistent citations.

OpenAI Company Knowledge won’t replace sound strategy, yet it clears the path for it. By unifying context, enforcing citations, and tightening feedback loops, teams shift from speculation to documented decisions. Start with the three workflows that slow progress the most (briefs, post-mortems, and weekly rollups), then expand into VoC synthesis, creative QA, sales enablement, and release planning.

Final Thought

Start small and measure fast. Make evidence the default with citations and shared storage. Keep source hygiene tight and expand into VoC, creative QA, and release planning as results compound. Treat OpenAI Company Knowledge as a system with standard prompts, governance, and a weekly scorecard to sustain ROI.