Facebook is now rating users on their trustworthiness, according to a recent article by The Washington Post. The rating system, which Facebook reportedly developed over the past year, scores users' credibility on a scale from zero to one. Its purpose is to help Facebook judge whether users who report content as false are themselves credible. The company found that some people report information as false not because it is untrue, but because they disagree with it or because they are deliberately trying to damage the reputations of certain publishers.

“I like to make the joke that, if people only reported things that were false, this job would be so easy!” Tessa Lyons, Facebook’s product manager in charge of fighting misinformation, told The Washington Post. “People often report things that they just disagree with.”

According to The Washington Post, the trustworthiness score is not meant to be an absolute measure of a person's credibility. Instead, it is one of many behavioral signals the platform weighs when assessing credibility. Facebook also considers which users tend to flag content and which publishers other users regard as trustworthy.

“One of the signals we use is how people interact with articles,” Lyons said. “For example, if someone previously gave us feedback that an article was false and the article was confirmed false by a fact-checker, then we might weigh that person’s future false-news feedback more than someone who indiscriminately provides false-news feedback on lots of articles, including ones that end up being rated as true.”

The Washington Post also reports that Facebook has not disclosed the criteria it uses to calculate the scores, nor whether every user will be assigned one.

Trustworthiness Ratings Are Just One Cog in Fake News Prevention

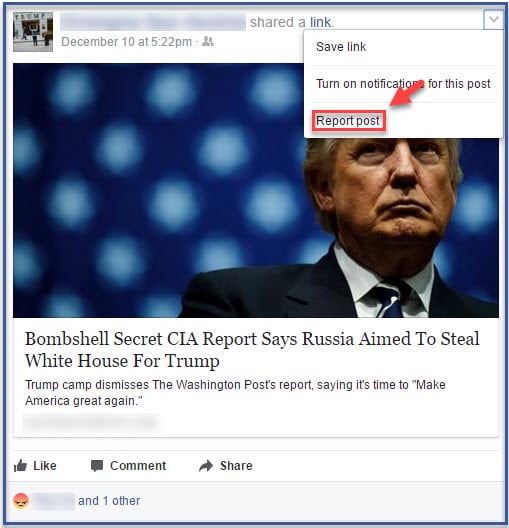

Lately, Facebook has struggled to keep fake news from spiraling out of control on its platform. Fake news became a national concern when the public learned that the platform had been deliberately exploited during the 2016 US presidential election. Russian troll accounts had run divisive political ads designed to sway voters and stir up negative emotions. In addition, the data firm Cambridge Analytica targeted users with manipulative messaging during Donald Trump's campaign after improperly collecting personal information from more than 80 million profiles.

In response to the spread of fake news and the misuse of its platform, Facebook has introduced a series of new practices and features. Back in February, the company sent a two-question survey about publishers' trustworthiness to a sample of users. Before that, Facebook announced that it was reducing the number of news sources in News Feed. The company has also increased transparency for Pages and ads by labeling electoral and issue ads "Political Ad" and by introducing new authorization processes for advertisers.

Now Facebook is rating the trustworthiness of its users as well, with the aim of ensuring that trustworthy publishers who distribute factual news aren't unfairly removed or demoted on the platform because of bad-faith reports.

With a user base of more than two billion people, Facebook faces a difficult task. No single approach is foolproof, so the company needs several overlapping measures that act as checks and balances on one another.

No one is perfect, and Facebook is no exception. There will likely always be a few loopholes in its system of checks and balances. However, the company does appear to be working to correct its mistakes and to provide a better experience for both users and publishers. While fake news will never disappear completely, Facebook's latest efforts may at least reduce its presence on the social network. The end result could be a predominantly trustworthy space that fosters healthy discussion.

Written by Anna Hubbel, staff writer at AdvertiseMint, a Facebook advertising company